Artificial intelligence: from digital blackface to prejudiced policing, new dangers and ethical questions arise

Image: Shutterstock

Artificial intelligence (AI) continues to make headlines as its advances and practical uses reach the mainstream. The simplest definition of AI is the reproduction or imitation of human intelligence in machines programmed to perform tasks the way humans would. Although it may not be immediately obvious, AI is already integrated into many aspects of our everyday lives. It is at work when you unlock your iPhone with Face ID. It selects the ads that appear in your social media feeds, and it automates customer service interactions through chatbots. AI is also expected to be a major contributor to the global economy: a PwC report estimated that it could add up to $15.7 trillion to the world economy by 2030.

While the technology holds promise across industries and societies, several mainstream uses of AI in the last few years have raised complicated questions and serious ethical concerns.

Artistic theft

Towards the end of 2022, many social media feeds were flooded with ‘cartoonified’ versions of people’s photos. These were created with the Lensa AI app, which describes its service as “a brand new way of making your selfies look better than you could have ever imagined.”

On the surface this seemed like harmless fun. However, many artists quickly pointed out that the app can produce such stunning images from people’s uploaded photos only because of hours of meticulous work by real-life creators. Apps like Lensa are built on Stable Diffusion, an AI image-generation model trained on billions of images scraped from the internet. Those images include work by artists with distinctive stylistic techniques, and the model draws on patterns learned from them to generate new ones.

As digital artist Meg Rae described it: “[The Lensa app] uses stable diffusion, an AI art model, to sample artwork from artists that never consented to their work being used. This is art theft.”

Artists also pointed out that apps like Lensa price these images so low that they undercut artists’ ability to charge fair prices for their own work, threatening their livelihoods. The profits generated from this AI are significant. The Lensa AI app made over $16 million in 2022, and $8 million of that came in December alone, when the ‘cartoonifying’ trend took off on social media.

Digital blackface

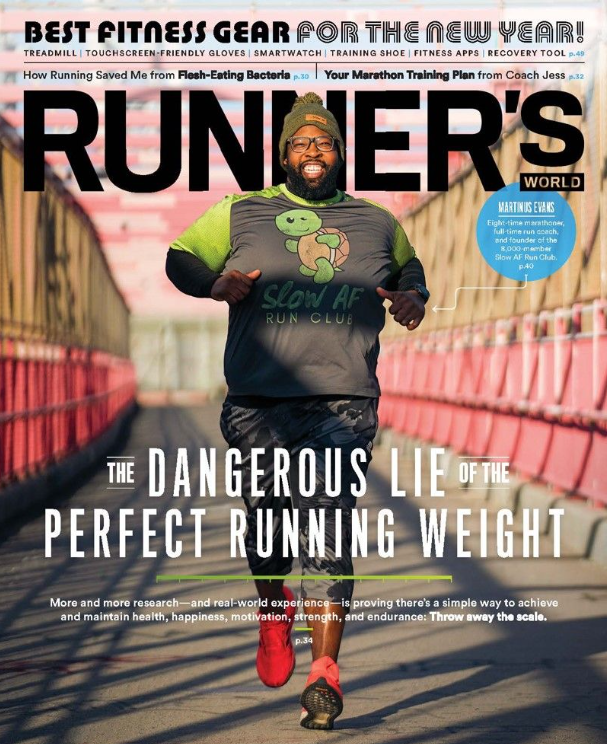

A similar exploitation of technology to generate huge profits can be seen in the cases of Shudu, the digital model, and FN Meka, the AI rapper.

Shudu boasts over 230,000 Instagram followers, and her bio describes her as “the world’s first digital supermodel.” She comes with an elaborate backstory that presents her as an Indigenous Black South African woman. Her stunning face has been featured in major publications such as Vogue and V Magazine, and in big-name collaborations with Louis Vuitton and other luxury brands. But although her social media presence leans heavily on her perceived Blackness, she is the creation of white British photographer Cameron-James Wilson, who is reaping the profits of her meteoric rise.

The case of the AI rapper FN Meka is similarly egregious in its exploitation of Blackness for profit. Real-life rapper Kyle The Hooligan says he is the voice behind the “world’s first AI rapper.” He has described in interviews how Factory New, the media company that created FN Meka, approached him with assurances of an opportunity to collaborate on new music. However, he claims that after he lent his voice and creative material to the project, Factory New cut him out of the development and marketing process entirely. The rapper was shocked to see how the company ended up using his intellectual property to create FN Meka.

“[They] use my voice, use my sound, use the culture and literally just left me high and dry,” Kyle The Hooligan explained.

At the height of his popularity, FN Meka had over 10 million TikTok followers, which was likely a motivating factor for Capitol Records when it signed him in August 2022. However, the hip-hop community responded with intense backlash over the clear exploitation of Black culture for profit, and the record deal was dissolved in less than a week. Capitol Records said in a statement: “We offer our deepest apologies to the Black community for our insensitivity in signing this project without asking enough questions about equity and the creative process behind it.”

Prejudiced policing

A more dangerous application of AI, with potentially life-threatening implications, is facial recognition technology and its integration into policing. In November 2022, Randall Reid was wrongfully arrested for a theft that occurred in Louisiana, a state he had never visited. He spent six days in jail before the police department realized that the facial recognition software that had identified him as a suspect had made an error.

Police agencies consistently lack transparency about the extent to which they use facial recognition technology. The general recommendation within police departments appears to be that the technology should be used only to generate leads, which officers must then verify. However, several cases like Reid’s have occurred in the past few years, in which a wrongful facial identification led to the arrest of an innocent Black man. For instance, in February 2019, Nijeer Parks spent ten days in jail after police used facial recognition software to accuse him of shoplifting and attempting to hit a police officer with a car. He was in fact 30 miles away at the time of the incident.

Critics of facial recognition technology have presented evidence that it misidentifies people of color at systematically higher rates than white people. In 2018, Ghanaian-American researcher Dr. Joy Buolamwini of the Massachusetts Institute of Technology (MIT) found that commercial facial analysis systems were far less accurate for darker-skinned women than for lighter-skinned men. Her research showed that harmful biases can enter facial recognition technology through the data sets and algorithms that computer scientists create. She is featured in the documentary Coded Bias, which explores bias in algorithms and the significant effects it can have on our immediate future.

“This is not a case of one bad algorithm. What we’re seeing is a reflection of systemic racism in automated tools like artificial intelligence,” Dr. Buolamwini said. “We can’t leave it to companies to self-regulate…we absolutely need lawmakers.”

Legislating AI

Regulating AI is proving to be a significant challenge for lawmakers around the world, who are struggling to create policies that can keep pace with the rapidly changing technology. To assist policymakers, international bodies that typically advise governments, such as the World Economic Forum and the Organisation for Economic Co-operation and Development (OECD), have developed ethical guidelines for AI technology.

In October 2022, the Biden administration released a Blueprint for an AI Bill of Rights outlining “principles that should guide the design, use, and deployment of automated systems to protect the American public in the age of artificial intelligence.” In January 2023, Congressman Ted Lieu wrote an opinion piece for The New York Times advocating the creation of a nonpartisan AI Commission. The commission would recommend how to structure a federal agency to regulate AI. To date, there is still no federal legislation regulating AI in the United States.

In 2021, the European Commission proposed the AI Act, which takes a “risk-based approach” to regulation. The AI Act includes provisions that would ban AI systems that violate individuals’ rights, including those that “exploit vulnerabilities of any group of people due to their age, physical, or mental disability in a manner that may cause psychological or physical harm.” The AI Act is still being negotiated within the European Union.

What are the solutions?

Dr. Buolamwini is the founder of the Algorithmic Justice League (AJL), an organization leading efforts to achieve equitable and accountable AI. AJL’s mission is to use art and research to raise awareness of AI’s impacts, and to empower advocates and policymakers to proactively prevent AI harm. AJL promotes four principles to counter AI harm: affirmative consent, meaning that everyone should be able to choose whether or not to interact with AI; meaningful transparency between developers, businesses and the public; continuous oversight and accountability; and actionable critique informed by research.

As an important voice in the industry, Dr. Buolamwini continues to educate people about AI bias. Her work shows how that bias can permeate our everyday lives, and already has, leading to discrimination and exclusion. In her viral TED Talk, “How I’m fighting bias in algorithms,” she emphasizes the importance of increasing the representation of women and people of color in coding as a way to continue addressing gender and racial bias in AI.

This underrepresentation is significant: a recent United Nations report found that women make up only 22% of people working in AI today. During the development of this technology, there is a serious need to build diverse teams that deliberately include women and people of color. These team members can actively contribute to inclusive coding and identify the blind spots their white male colleagues may have.

As the AJL aptly puts it when describing the critical nature of its work, “the deeper we dig, the more remnants of prejudice we will find in our technology. We cannot afford to look away this time because the stakes are simply too high.”
